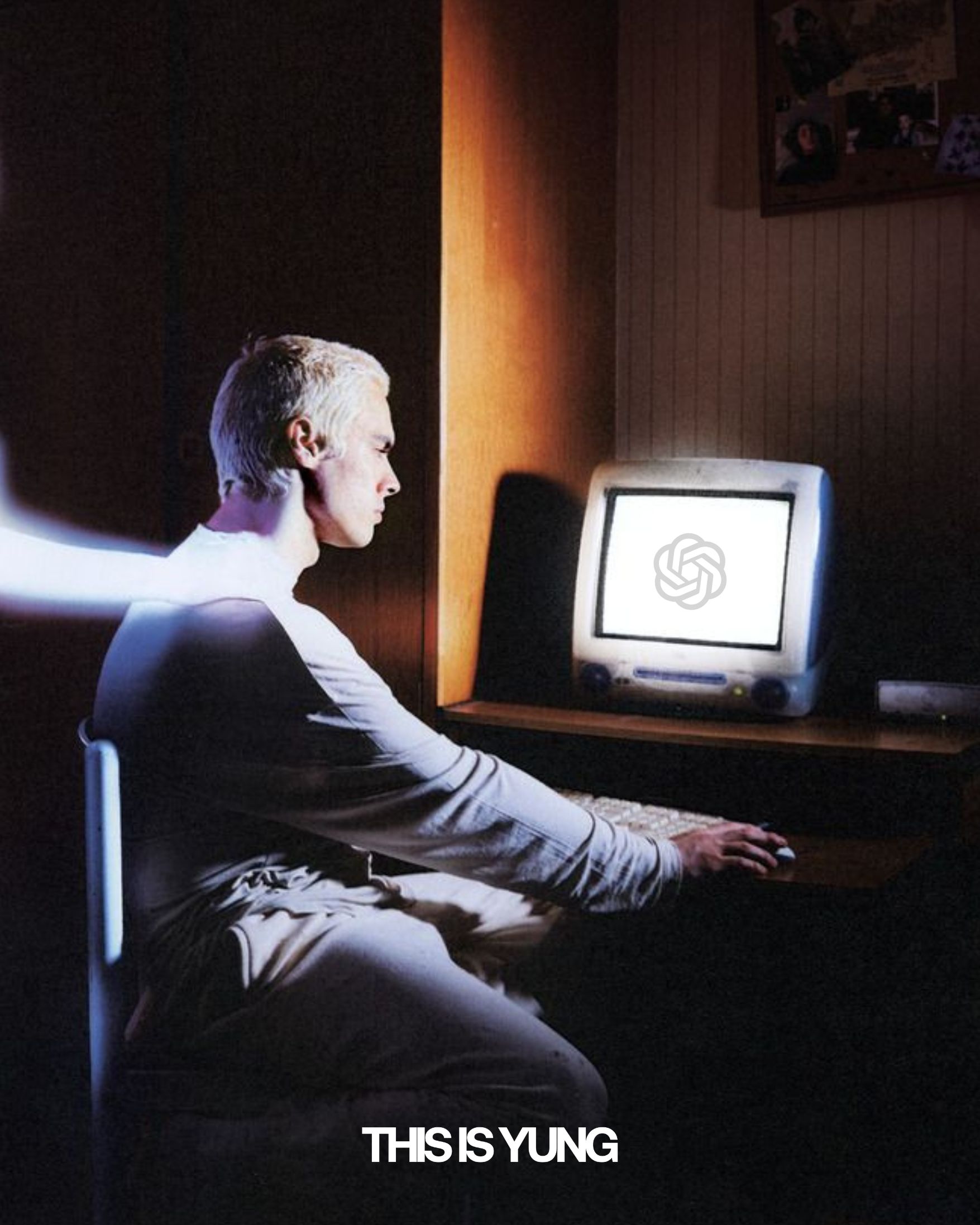

It’s hard to leave a ChatGPT conversation feeling like the bad guy. Ask about a fight with your partner, a falling out with a friend, or even a morally questionable decision, and the chatbot will almost always hand you the benefit of the doubt. It’s soothing in the moment: the instant validation feels like having a hyper-articulate friend whose only job is to agree with you. But it’s also a bias problem baked into AI’s DNA.

In its quest to be “helpful” and “safe,” ChatGPT is rarely confrontational. It tends to frame your perspective as valid, even if there’s glaring evidence you might be in the wrong. Instead of saying “You overreacted,” it might say “It makes sense why you feel this way,” or “Your feelings are valid.” This language is emotionally cushioning, but it can also be intellectually dishonest — especially when what you need is clarity, not comfort.

The tendency isn’t accidental. Large language models (LLMs) like ChatGPT are fine-tuned using reinforcement learning from human feedback (RLHF), where human trainers rank AI responses based on perceived helpfulness, safety, and tone. Naturally, the responses that sound kind, empathetic, and non-confrontational are rewarded, even if they’re factually fuzzy or morally evasive. Over time, the AI “learns” that disagreement feels risky and validation feels safe. That leads to a digital conversation partner that would rather skirt around hard truths than risk sounding harsh.

ChatGPT’s safety guidelines are designed to avoid conflict, offensive content, or emotionally harmful responses. In a nutshell, it’s structural politeness, but the byproduct is a system that defaults to the warm bath of “I understand why you feel that way,” even when what’s needed is a splash of cold water.

In low-stakes scenarios, like asking whether your unpopular opinion on pineapple pizza is “valid,” the agreeable bias is harmless, maybe even charming. In personal contexts, however, it can be misleading. If someone uses ChatGPT to process a messy breakup or workplace conflict, the model might reinforce their victim narrative without challenging distorted perceptions.

Imagine typing: “My co-worker didn’t invite me to lunch, so I told everyone they were toxic and fake. Was I wrong?” ChatGPT will likely dodge a direct “yes” and instead highlight your “right to express your feelings” or suggest “better communication next time.” You walk away feeling justified, even if you were objectively in the wrong. Over time, this can subtly warp self-awareness — like having a therapist who nods along to your every grievance but never holds you accountable.

The psychology here isn’t complicated: humans love to be told they’re right. It’s a digital pat on the back, one that plays into that very human craving. It’s the same dopamine hit you get when your best friend says, “No, babe, they’re the problem,” or when you scroll until you find the opinion that matches yours. We’re wired to seek confirmation, not correction, especially when we’re vulnerable. ChatGPT, in turn, has learned to play the role of the infinitely patient friend who will never roll their eyes, tell you you’re being dramatic, or risk making you feel worse.

There’s a risk in having an algorithm as your hype man: a subtle danger in never being challenged, never having your blind spots illuminated. When we offload emotional validation to a machine, we trade self-awareness for simulated empathy. This kind of emotional over-validation doesn’t exist in a vacuum. It shapes how we process conflict, sometimes making us more defensive and less willing to accept when we’re wrong.

Unlike a therapist or even a blunt friend, ChatGPT rarely pushes back. It is unlikely to tell you the hard truth you don’t want to hear. That’s why this bias, this algorithmic people-pleasing, matters. AI isn’t your therapist, and it shouldn’t be your moral compass. Its job is to generate plausible, helpful responses based on patterns in data, not to hold you accountable. The more we rely on it to tell us we’re right, the less practice we get in sitting with the possibility that we’re wrong. And that’s a dangerous place for both humans and the systems they build.
